The goal of this writeup is to document my experience running Leading Computer-Based Labs as part of UCSB’s Teaching Assistant Orientation (TAO) 2025.

Introduction

I was provided with the slides from previous years’ iterations of this workshop, developed by Ethan Marzban, Zach Sisco, and other wonderful folks. Based on the existing materials, I inferred that the learning outcomes of this workshop were for attendees to:

- Understand the function and general format of computer-based labs at UCSB.

- Be aware of the logistics of running labs (locations, keys, communication with the instructor, etc.).

- Be able to lead and develop labs based on effective teaching principles.

- Be able to handle tricky scenarios that commonly arise during student interactions.

I largely adopted the same materials, while tweaking a few things here and there and trying some new stuff, some of which worked and some of which didn’t work so well. The slides I used can be found here.

The workshop was run twice, first at 10AM and then at 11AM. Each session had 30 participants. In each section, there were 3–5 she/her participants, with the rest being he/him, which reflects the (unfortunate) gender imbalance in STEM.

This was my first experience teaching others how to teach. Compared to teaching technical knowledge, it required me to constantly improvise on the spot in order to respond to unanticipated questions from the audience. The workshop also prominently featured group discussion, a method I had rarely used in my own (upper-level, theoretical-leaning CS) classes. Needless to say, I learned a lot from this experience.

The outline of the workshop is as follows:

- Settling in

- Introductions

- Info dump: How to prepare for your first lab/section? How to lead successful labs/sections?

- What to do in tricky/difficult scenarios? (group activity)

- Questions, log attendance, and evaluate this workshop.

In what follows, for each segment in the outline, I will document, analyze, and reflect on what I did, what happened, and what I learned.

Segment 1 - Settling In

Here comes the first challenge: every participant is a new face both to me and to the other participants, yet we will only cross paths for 50 minutes of our lives, after which we’ll be unlikely to meet again.[1] Since the last third of the workshop was group discussion, I needed to break the ice before then. Luckily, the participants were all in good spirits and willing to contribute, probably for two reasons: (1) they could sense that I was a newbie at this job, so they were extra supportive of whatever I did or said, and (2) some of the opening activities did help build a welcoming and inclusive environment.


I arrived 15 minutes before the start of the workshop to both physically and mentally prepare myself: setting up the projector, writing down the names of 8 cute animals on the whiteboard (I’ll discuss this later), and greeting participants who slowly trickled in. Luckily, in both the 10AM and 11AM workshops, there were at least 1–2 people who knew me (because they attended the CS dept’s orientation on the previous day where I was a speaker). This gave me an easier chance to start some chitchat (admittedly not my strength), which led to more small talk among the participants themselves.

As a side note, I originally planned to connect my own laptop via HDMI, but I could not figure out how to switch the source from the Lectern computer to my own. Luckily, Olga and her team logged into the Lectern for me beforehand, so that I could run Google Slides on the Lectern computer instead. If whoever is reading this is planning to run the workshop in ILP classrooms again, just note that the switch pad is attached to the front panel of the Lectern (facing yourself) around knee-level, which can be easy to miss.

I delayed the start of the workshop by 2 minutes to allow the last couple of participants to arrive, scan the QR code to log attendance, and settle in. I have come to understand that delaying the start of any event at UCSB by a few minutes is an unspoken ritual. So I guess it helps to get new TAs acquainted with this wonderful tradition.

Segment 2 - Introductions

I first asked the participants to choose which of the eight animals they would like to be reborn as, without explaining the reason behind this question.[2] The choice would later be used to divide the participants into groups for discussion. I learned this trick from Olga Faccani and Lisa Berry, and found it an effective way both to signal to the participants that the vibe of the event is informal (depending on the prompt) and to act as a “Chekhov’s gun” device that gets the participants hooked, since they know something will happen but they don’t know what.

I then quickly went over the outline of the workshop. I explicitly acknowledged the fact that there would be a lot of info dumping in the middle segment where I was obliged to go over the logistics of running labs. I promised them that in the last part we would discuss a couple of tricky TA–student interaction scenarios that I personally (and other TAs I know) have experienced, which hopefully made it less painful to sit through the logistics part.

After introducing myself, I then had each student introduce themselves, including their name (and pronouns if desired), their department, their choice of animal to be reborn as, and their answers to two prompts:

- When was the first time you started programming?

- What’s your biggest concern/worry about teaching computer-based labs?

Everything except for the second prompt was used in the previous year’s workshop.

In retrospect, this segment took way too long and left me little time for the interesting bit on group scenarios, as reflected in the participant evaluations. I simply did not expect how much time it would take for all 30 participants to introduce themselves. In the 10AM section, the last participant finished their introduction at around 10:25AM. So we were already halfway into the workshop, arguably without covering any “real” stuff. Having learned this, I tried to rush the introductions in the 11AM workshop (which I felt made the vibe worse due to less follow-up: it’s like throwing a coin down a watered well vs. an empty well).

I wonder how last year’s workshop speaker was able to get through this segment quickly, since I reused the previous workshop’s introduction segment.

Nevertheless, I do think the prompts (especially the second one) are valuable in giving me a sense of where the attendees come from and what they are most interested in getting help with. For the next iteration, it is worth thinking about a change of format that still collects these “worries.” I’ll propose an idea very shortly.

That said, I feel these introduction prompts can very well be used in cases where it’s ok to spend more time on getting to know the students (like a quarter-long course). Here are some of the things I learned from this segment:

- Always take quick notes whenever a student speaks: this not only gives them the impression that you genuinely care about them, but also provides valuable references for review after the class. I learned a lot from participants’ introductions, especially their responses to the second prompt (will discuss shortly).

- Always follow up with a student response. (As obvious as this might sound, I had not been very good at this in my teaching.) I felt that the follow-ups really helped to make the general vibe warmer. Unfortunately, doing so took a long time during the 10AM workshop, so I cut back on the amount of follow-up for the 11AM workshop, which visibly made the vibe at the 11AM section less warm than the 10AM section.

- The second prompt, which I probably stole from Lisa and Josh in Grad 210, was great. The question is not “do you have any concern or worry,” but instead “what concern/worry do you have.” This prompt did two things. (1) It completely normalized the unease the participants might feel, and signaled to them that they were here precisely because they had these completely justified worries; it also gave me plenty of opportunities to follow up and affirm that whatever they worried about had happened to me and to other experienced TAs. (2) It gave me plenty of anchor points to tie whatever I talked about later in the workshop back to these genuine worries, making the workshop feel more relevant to the participants (and likely helping with engagement when I followed up with “we’ll talk about this concern of yours shortly!”).

Due to the time limit, I think future iterations of the workshop can skip the one-by-one introductions completely, making more room for group and class discussion. However, I still think it’s possible, and valuable, to collect responses to the second prompt. Here’s a trick to try in the future (which I learned from Lisa): at the beginning of the class, have the participants fill out a very short, anonymous Google Form. In this case, “your department” + “your biggest worry” should suffice. Then, in front of the class, cluster all responses into major categories, either by hand or using LLMs, which are actually not bad at this. The speaker can briefly comment on each category: go through some representative worries in each category, validating them while also foreshadowing that they’ll be discussed very soon.

Of course, I didn’t get a chance to try out this method, but here are the results I manually collected in class. I hope these results will inform how to improve future iterations of the workshop by responding to participants’ academic backgrounds and real concerns.

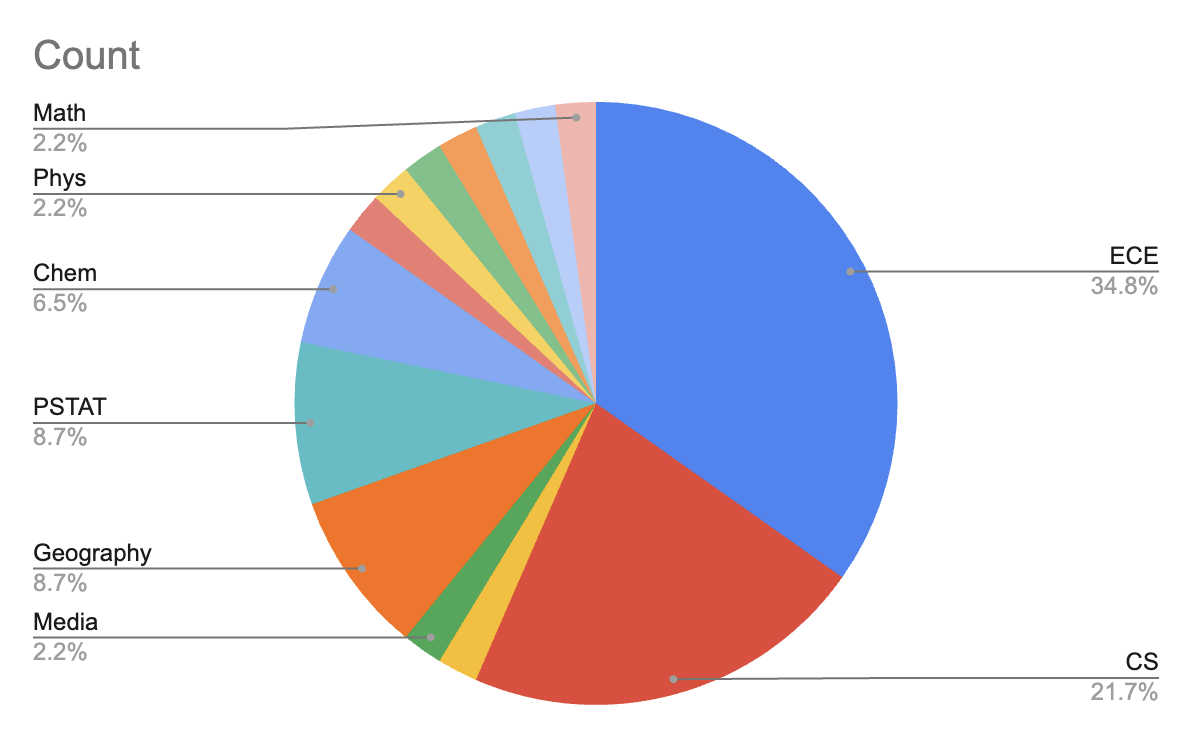

Department Representation

Here’s a pie chart of represented departments across two workshops (I definitely have missed a few departments/schools that I’m ignorant of). The two workshops had roughly the same distribution:

So the top-5 most represented departments are:

- Electrical and Computer Engineering: 34.8%

- Computer Science: 21.7%

- Geography: 8.7%

- PSTAT (Statistics and Applied Probability): 8.7%

- Chemistry/Chemical Engineering: 6.5%

I expected the overwhelming majority to be CS, which turned out not to be the case!

Biggest Worries

Interestingly, the 10AM and 11AM workshops had distinctly different flavors of worries/concerns about leading labs! (Probably because later responses were influenced by earlier ones, which isn’t unexpected: many of the earlier responses are so common that people would find themselves agreeing with them.) I would categorize concerns voiced in the 10AM workshop as leaning towards “not being able to teach/answer questions effectively,” while tech issues were a pretty common theme among 11AM participants.

At the end of the document, I will attach the list of all concerns (that I can still decipher from my terrible and incomplete handwriting—damn is stenography hard). Broadly, these concerns fall into the following categories:

- Personal knowledge & experience gaps. Examples:

  - Not being able to debug (identify the root cause of bugs that students run into)

  - Not being able to solve the lab themselves

  - Lack of coding experience themselves

- Equity, pacing, and student management. Examples:

  - Making sure everyone is on the same page

  - How to split time between different students, e.g., when some are clearly falling behind and need extra help

  - Going too fast in explanation

- Tech issues (software and hardware). Examples:

  - Fixing tech issues live (e.g., projector not working)

  - Troubleshooting inconsistent or broken software installations

  - Unable to reproduce expected results in live demos

A couple of participants commented that their concerns stemmed from their own experience as students, which I can imagine explains most of the concerns above. My interpretation is that as students, they saw their own TA running into many of the same issues, so they fear that they will repeat the same mistakes.

Later in the workshop, I was able to tie some of the stuff I talked about back to their concerns. Some of them were unaddressed, or could only be addressed through experience (in my opinion). But it might be a good idea to adapt future iterations of the workshop to take into account some of these issues; simply incorporating them into group discussions on tricky scenarios could be great.

Segment 3 - The Info Dump

I added new slides on general lab format and quickly walked through a sample lab from UCSB CS 8, showing the participants what the expectations are for TAs. This new segment was well received, with 3 participants explicitly commenting on it as one of the takeaways from this workshop.

To be frank, I dislike lecturing and slide-reading, but before the workshop, I couldn’t really think of a better way to deliver the logistics stuff (“you need to get THIS key and THIS login account from THIS office if you want to use THIS computer”) and the “5 tips for running labs.” I did try injecting more personal stories, warnings, and coercions, especially during the part on “don’t touch the students’ keyboard!”, in order to keep the participants from falling asleep.

Sadly, at least one participant was struggling to keep their eyes open. So I quickly went through the slides so that I could get to the more interesting bit. Nevertheless, participants did find this segment useful according to the evaluation results, with 9 participants saying that “how to field student questions/help them” was the top takeaway, and 6 participants saying that the bit on logistics (physically visiting the lab before their first section) was helpful. Participants (3 of them) also appreciated my impassioned call to not touch the keyboard.

In retrospect, here’s how I might have done this segment differently. One way I can think of, which ties nicely to the next segment on scenario group discussion, is to recast some of the logistics and practical tips into imaginary scenarios. Maybe go through a few of them with the whole class: pose the scenario, let them think for a bit, take volunteer responses, then show a slide with your takes (instead of piling them into group discussion, since these are important enough that everyone should get exposed, whereas there are already so many scenarios for group discussion that participants can’t get through all).

Segment 4 - Group Scenarios

This is the fun part—7 participants voted this as the one thing they learned—though covered in much less time than I would have liked. I inherited the group discussion on 8 tricky scenarios from Zach and Ethan.

However, while preparing the workshop, I felt that something was conspicuously missing, the elephant in the room: AI use in the classroom. So before dividing the class into separate discussion groups, I had the whole group pause and think about the following scenario:

After I read the scenario to the participants, I heard some awkward laughs and saw some nods. One of the participants said that they had run into a similar situation with students before. I said I did too—it was during one of my office hours and the student was clearly doing it for “fun” (seeing whether ChatGPT could even solve the problem at all). But I felt something like this, or something perhaps worse, would be bound to happen at some point during the participants’ TA career, now that “everyone is cheating their way through college”.

A participant suggested that we check the AI policy section on the syllabus. I then followed up with another question: “what if you don’t see any section on AI policy?” This led nicely to the next slide on academic integrity and plagiarism in the context of AI, and how instructors, not the TAs, were responsible for arbitrating academic integrity violations. We had more discussion about similar cases that participants suggested (which I forgot to write down sadly), but I felt the discussion was productive. Indeed, in the participant evaluation, this segment received the most votes for “the one takeaway from the workshop” (10 votes out of 41 unique responses), so I would consider the addition of this AI scenario a success.

I then handed out worksheets that described 8 more scenarios, and asked participants to form discussion groups based on their choices of animal. (The 10AM section had little time left, so I instead had them talk to their neighbors in pairs.) I walked around the room to eavesdrop on some of the groups.

The final planned segment, where we would all go through each group’s notes, had to be dropped since we ran out of time. I thanked them for coming, bid them farewell, and stayed around for a couple more private questions. A common one was: are you going to post the slides? To which I answered that the TAO team would share the slides with everyone later.

Reflection on Participant Evaluation

At the end of the workshop, participants were invited to submit an anonymous survey to respond to the following questions:

- How useful was this workshop (on a scale of Very useful, Mostly useful, A little bit useful, Not useful)?

- What is one thing from this workshop that you might use in your teaching practice?

- Please provide any constructive feedback to the workshop leader about the workshop content or format.

Overall Usefulness

Here are the aggregated responses:

- Not useful: 0

- A little bit useful: 4

- Mostly useful: 13

- Very useful: 16

So if we assign a score of 1 to “Not useful” and 4 to “Very useful,” the average usefulness is about 3.36/4. Not bad, but definitely plenty of room for improvement!
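For anyone who wants to check the arithmetic, here is a minimal sketch of that average. The counts are the ones listed above. The text only fixes the endpoints of the scale (1 and 4), so the intermediate scores of 2 and 3 are my assumption of an evenly spaced scale.

```python
# Response counts copied from the aggregated list above.
counts = {
    "Not useful": 0,
    "A little bit useful": 4,
    "Mostly useful": 13,
    "Very useful": 16,
}

# Scores: 1 and 4 come from the text; 2 and 3 are assumed (evenly spaced scale).
scores = {
    "Not useful": 1,
    "A little bit useful": 2,
    "Mostly useful": 3,
    "Very useful": 4,
}

total_responses = sum(counts.values())
weighted_sum = sum(scores[label] * n for label, n in counts.items())
average = weighted_sum / total_responses

print(f"{total_responses} responses, average usefulness {average:.2f}/4")
```

With these numbers, the weighted sum is 111 over 33 responses, which rounds to the 3.36 quoted above.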

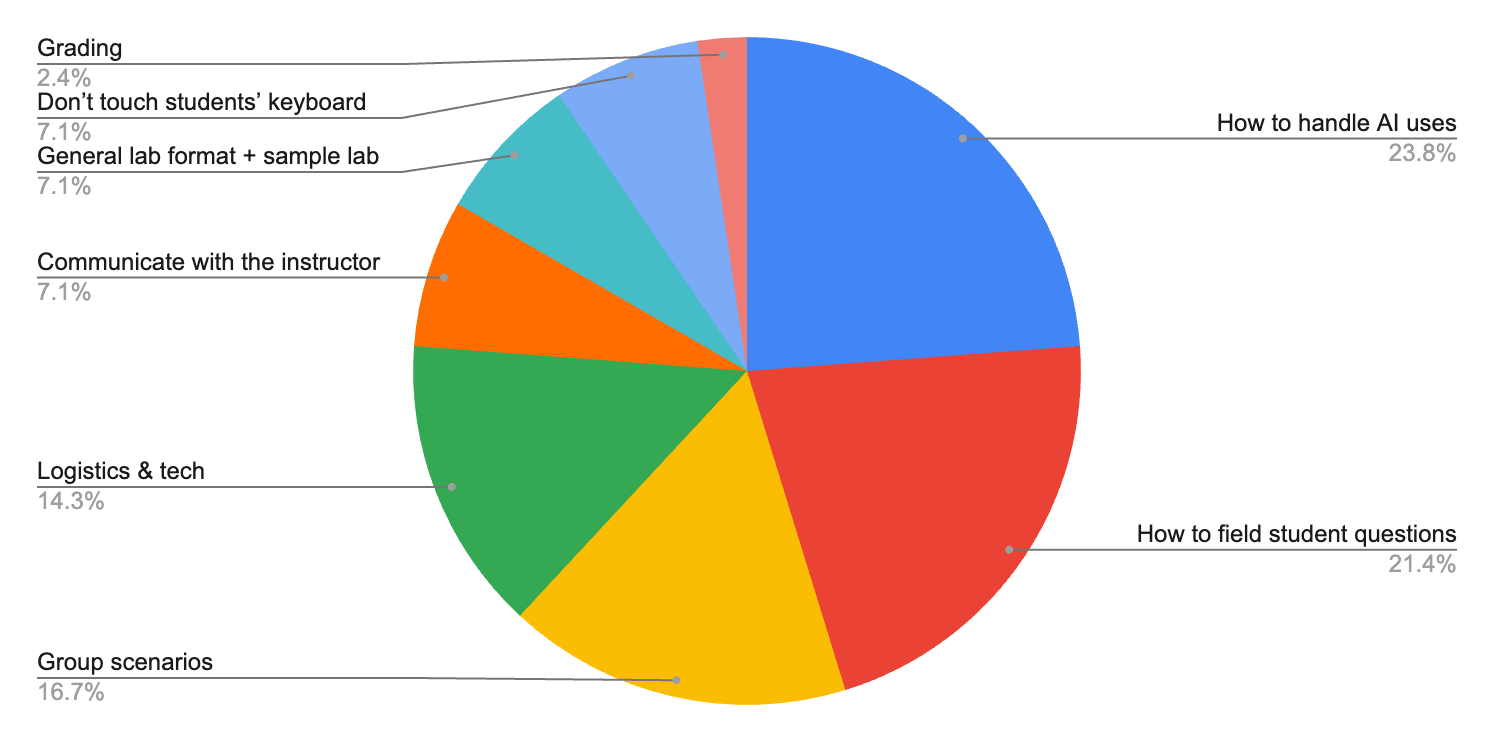

One Takeaway from This Workshop

- How to handle AI uses: 10

- How to field student questions: 9

- Group discussion on handling tricky scenarios: 7

- Logistics & how to use classroom tech: 6

- How to communicate with the instructor: 3

- General lab format explained through an example lab: 3

- Don’t touch students’ keyboard: 3

- Grading: 1

Constructive Feedback

- More time/less material/less time spent on introductions: 12

- “Great”: 4

Conclusion

Overall, this was a unique and valuable learning experience for me, and I hope the participants learned a thing or two as well. I plan to rerun the same workshop as part of the CS dept’s TA training course next month, and I’ll try to incorporate some of the ideas for improvement that I jotted down here, as well as the participants’ feedback.

[1] This was unlike every teaching experience I’ve had, where in each instance I was given at least a couple of weeks to continually teach the same group of students, so I had the luxury of spending the entirety of the first few weeks to break the ice and establish trust with students.

[2] In retrospect, I definitely should have reframed the prompt as “which animal would you like to take care of as a pet (assuming it’s legal and humane to do so)”. Across both workshops, owl was the overwhelmingly popular choice, because it was “the apex predator that eats all of the other animals,” to quote one of the participants. I was completely blindsided by this response, as I expected them to choose simply based on how cute they thought each animal was. Alas, we STEM people are sometimes too rational. (Coincidentally, there were several Spanish native speakers in the room, who joyfully corrected my terrible pronunciation of axolotl.)